AGI vs AI: Understanding the Future of Intelligence

Artificial Intelligence (AI) and Artificial General Intelligence (AGI) are terms often used interchangeably, but they describe very different things. AI, as we know it today, powers everything from voice assistants to recommendation algorithms. AGI does not exist yet: it is a hypothetical form of machine intelligence that could redefine humanity’s relationship with machines.

AI, as the term is used today, refers to specialized systems designed for specific tasks, often called narrow AI. Think of Siri recognizing your voice or Netflix suggesting your next binge-watch. These systems excel within narrow domains, using machine learning to process data, identify patterns, and make decisions. Their capabilities are limited, though: they can’t operate outside the tasks they were built and trained for. A chess-playing AI, for instance, can’t write poetry or solve physics problems.

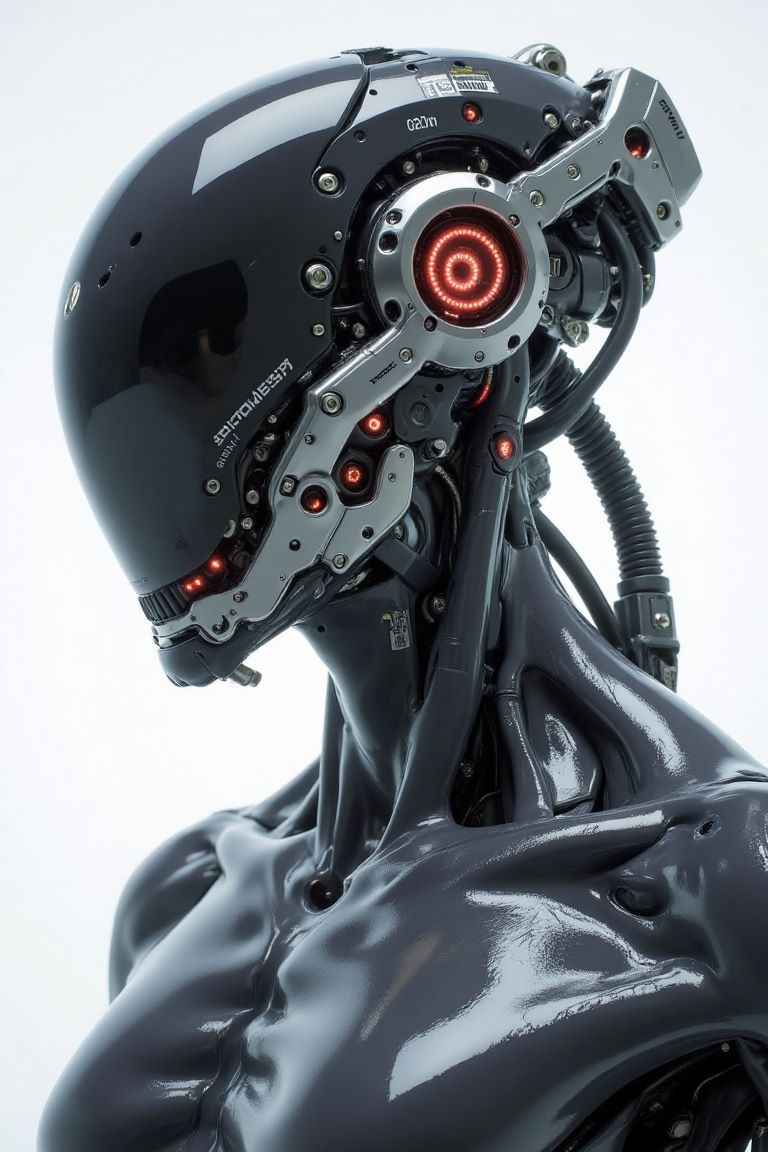

AGI, on the other hand, is often called the holy grail of cognitive computing. It describes a machine with human-like general intelligence, capable of learning, reasoning, and adapting across diverse tasks without being explicitly programmed for each one. An AGI could switch from composing music to diagnosing diseases or debating philosophy. It’s not about mastering one skill; it’s about handling any intellectual challenge a human might tackle.

The leap from AI to AGI is monumental. Current AI depends on vast datasets and narrowly defined objectives, while AGI would require an understanding of context, creativity, and perhaps even consciousness. Researchers at organizations like xAI are pushing toward this frontier, but challenges like computational complexity and ethical concerns loom large.

Why does this matter? AI enhances efficiency; AGI could transform society. From tackling climate change to exploring the cosmos, AGI’s potential is enormous, but so are the risks. As research moves toward that possibility, understanding the distinction is crucial for navigating the future responsibly.